I attended the NVIDIA AI Summit in Washington, DC, in October and learned how NVIDIA is shaping the future of artificial intelligence across multiple industries and domains. Here are my key takeaways from the event.

The Rise of Accelerated Computing

The summit kicked off with an address from Bob Pette, NVIDIA’s VP of Enterprise Platforms, on how accelerated computing is changing various sectors. What particularly caught my attention was the reported gain in processing efficiency: some applications were cited as achieving a 100,000x improvement in energy efficiency for inference. This leap isn’t just about raw performance; it’s about making AI more sustainable and accessible. According to the talk, the accelerated computing platform is driving progress across multiple domains:

- Sensor processing and digital twins

- Advanced cybersecurity systems

- Autonomous systems development

- Next-generation AI applications

Generative AI: From Theory to Practice

This part of the summit focused on NVIDIA’s generative AI platform. One particularly interesting session, “From Theory to Practice: Accelerating Generative AI With NVIDIA’s Full-Stack Framework,” covered:

Technical Implementation Details

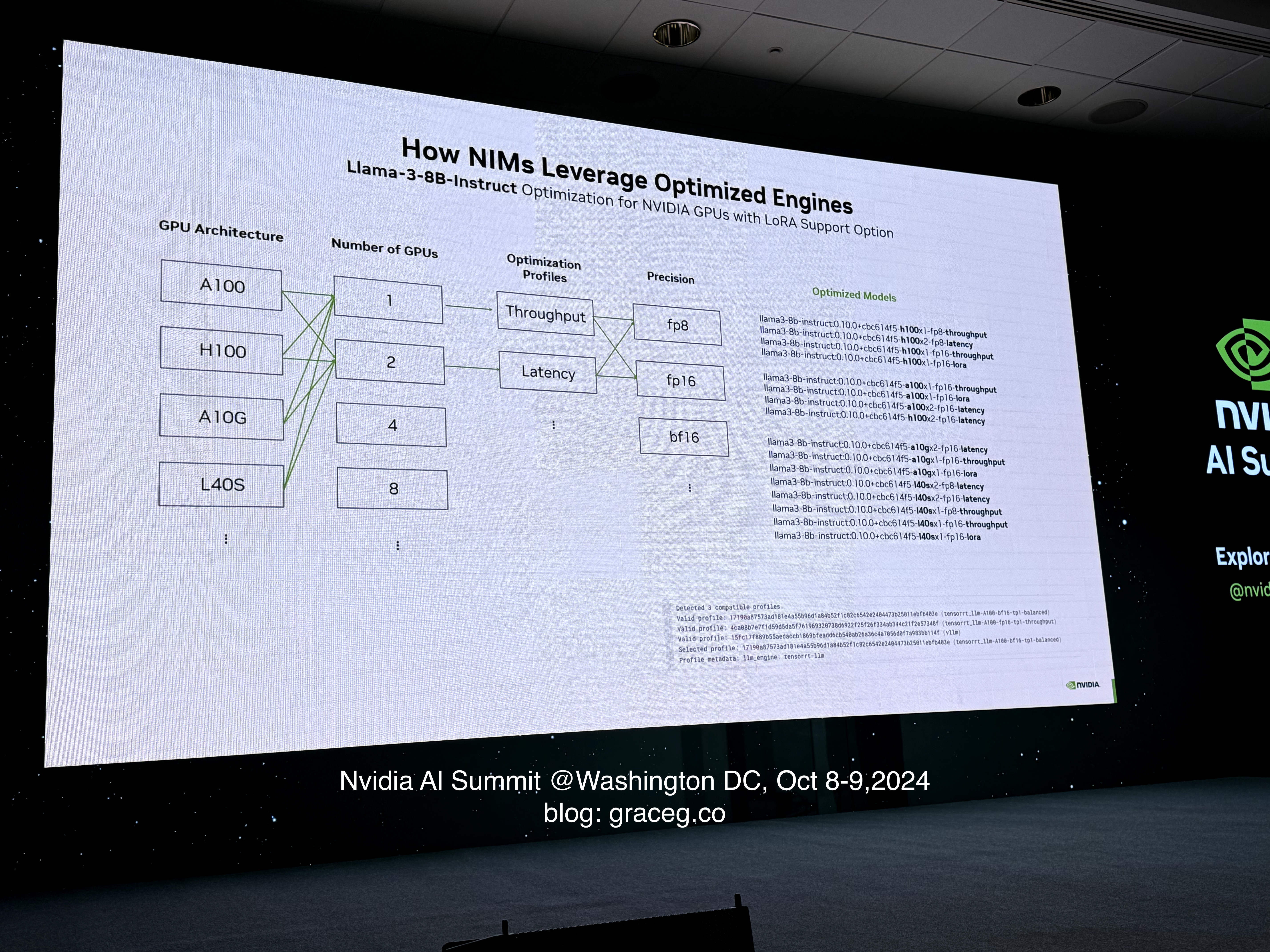

- Integration of NIM (NVIDIA Inference Microservices) for transforming traditional tools

- Best practices for prompt engineering and AI interaction

- Data preparation methodologies

- Model fine-tuning techniques

- Real-world applications

Enterprise-Scale Deployment

The session with Charlie Boyle covered what it takes to operationalize AI at scale, including:

- Optimization strategies for AI training efficiency

- Infrastructure requirements for enterprise deployment

- Solutions for providing seamless access to data science teams

- Real-world use cases from current AI leaders

Advanced LLM Development and Optimization

The technical depth of the LLM-focused sessions was particularly impressive. In “The Art and Science of LLM Optimization,” presented by Aastha Jhunjhunwala, we explored:

Fine-Tuning Strategies

- Parameter-efficient methods like LoRA (Low-Rank Adaptation)

- Prefix Tuning techniques

- Domain adaptation strategies

- Resource optimization for inference
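
To make the LoRA idea concrete, here is a minimal sketch of my own, not code from the session. It assumes a single linear layer with a frozen weight `W` and two small trainable factors `A` and `B`; the dimensions, `rank`, and `alpha` values are illustrative choices, and the random weights are stand-ins for a real pretrained model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions, not values from the talk).
d_in, d_out, rank, alpha = 64, 64, 4, 8.0

# Frozen pretrained weight (a random stand-in here).
W = rng.normal(size=(d_out, d_in))

# Trainable low-rank factors. B starts at zero, so the adapted layer
# initially produces exactly the same output as the frozen layer.
A = rng.normal(scale=0.01, size=(rank, d_in))
B = np.zeros((d_out, rank))


def lora_forward(x, W, A, B, alpha, rank):
    """Compute x @ (W + (alpha / rank) * B @ A).T without forming the full update."""
    base = x @ W.T
    update = (x @ A.T) @ B.T
    return base + (alpha / rank) * update


x = rng.normal(size=(2, d_in))
y = lora_forward(x, W, A, B, alpha, rank)

full_params = W.size           # parameters touched by full fine-tuning
lora_params = A.size + B.size  # parameters trained with LoRA
print(f"trainable parameters: {lora_params} of {full_params}")
```

In this toy setup only `A` and `B` would be updated during training, which is where the parameter savings come from; the gap widens quickly as the layer dimensions grow relative to the rank.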

Data Processing at Scale

Mitesh Patel’s session on data processing covered:

- Scalable architectures for LLM training

- Data quality optimization techniques

- Efficient processing pipelines

- Applications in code generation and translation
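
As a rough illustration of what one data quality step can look like, here is a small sketch of my own, not the pipeline shown in the session. It assumes two simple rules, a minimum word count and exact deduplication after whitespace and case normalization; the function names and the `min_words` threshold are hypothetical.

```python
import hashlib
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text.strip().lower())


def clean_corpus(docs, min_words=3):
    """Drop very short documents and exact duplicates (after normalization)."""
    seen = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # already kept an equivalent document
        seen.add(digest)
        kept.append(doc)
    return kept


docs = [
    "def add(a, b): return a + b",
    "def  add(a, b):   return a + b",  # whitespace-only variant of the first
    "TODO",
    "Translate this sentence into French.",
]
cleaned = clean_corpus(docs)
print(len(cleaned), "of", len(docs), "documents kept")
```

Production pipelines typically add fuzzy deduplication and model-based quality scoring on top of rules like these, and distribute the work across many machines.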

Factory of the Future: Sensor Fusion and Digital Twins

A fascinating session on manufacturing innovation showcased the integration of multiple cutting-edge technologies:

- Visual language models (VLMs) for automated inspection

- Sensor fusion techniques for comprehensive monitoring

- Digital twin implementation strategies

- Integration of generative AI in factory operations
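
The session did not prescribe a specific fusion algorithm in my notes, so the following is only a textbook example of the general idea: inverse-variance weighting, which combines independent noisy readings of the same quantity and trusts the less noisy sensor more. The readings and variances are made-up values.

```python
def fuse(readings):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused estimate and its variance, assuming the sensor
    errors are independent and every variance is positive.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(var <= 0 for _, var in readings):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total


# Two hypothetical temperature sensors: a noisy one and a more precise one.
readings = [(20.0, 4.0), (22.0, 1.0)]
fused_value, fused_var = fuse(readings)
print(f"fused estimate: {fused_value:.2f}, variance: {fused_var:.2f}")
```

The fused variance is smaller than either input variance, which is the basic argument for combining sensors rather than picking the best one.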

Infrastructure and Computing Innovations

DGX Blackwell Clusters

The deep dive into DGX Blackwell cluster architecture revealed sophisticated design considerations:

- Advanced network fabric architecture

- Optimized interconnect systems

- Innovative cooling solutions (both air-cooled and liquid-cooled)

- Performance, power, and thermal trade-off optimizations

CUDA Platform Advancement

The CUDA session provided insights into:

- Latest platform capabilities

- Evolution of the GPU computing ecosystem

- Upcoming features and improvements

- Integration with modern AI frameworks

Physical AI and Robotics Integration

Rev Lebaredian’s strategic session unveiled NVIDIA’s comprehensive approach to physical AI:

Platform Integration

- OVX platform for high-fidelity simulation

- DGX systems for AI model training

- Jetson platform for edge inference

- Omniverse for collaborative, real-time simulations

Technical Capabilities

- Physics-accurate environmental modeling

- Real-time simulation capabilities

- Edge AI deployment strategies

- Integration of autonomous systems

NVIDIA is building more than just hardware and software components - it is creating a comprehensive ecosystem for AI development and deployment. Key future-facing highlights include:

- RAG (Retrieval-Augmented Generation) innovations through NVIDIA NIM

- Advanced threat detection capabilities using AI

- Quantum computing challenges and AI-driven solutions

- Enhanced cybersecurity frameworks for AI systems
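
For readers new to RAG, here is a minimal sketch of the retrieval step, written by me for illustration. It does not use NVIDIA NIM or any NVIDIA API: a simple bag-of-words cosine similarity stands in for a real embedding model, and the resulting prompt would be sent to whatever LLM endpoint you use. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Prepend the retrieved context to the question before calling a model."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    "Jetson modules run inference at the edge.",
    "DGX systems are used for training large models.",
    "Omniverse supports collaborative simulation.",
]
prompt = build_prompt("Which systems are used for training?", docs)
print(prompt)
```

The point of the pattern is that the model answers from retrieved text rather than from memory alone, so swapping in a better retriever improves answers without retraining the model.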

Final Thoughts

The NVIDIA AI Summit reinforced my belief that we’re at a pivotal moment in the AI revolution. The combination of hardware advances, software innovation, and a focus on responsible development creates a foundation for transformative change across industries. The technical depth of the sessions, particularly around LLM optimization, sensor fusion, and infrastructure scaling, offered practical insights for organizations looking to implement these technologies.

The challenge now lies in taking these insights and turning them into actionable strategies that can drive innovation and growth in our own organizations. The future of AI is not just about technology - it’s about how we use these tools to solve real problems and create meaningful impact.